Seigow Matsuoka, Naoko Tosa: Inspiration Computing Robot

Artist(s):

- Seigow Matsuoka
- Naoko Tosa

Title:

- Inspiration Computing Robot

Exhibition:

Creation Year:

- 2005

Medium:

- Art installation with robot

Category:

Artist Statement:

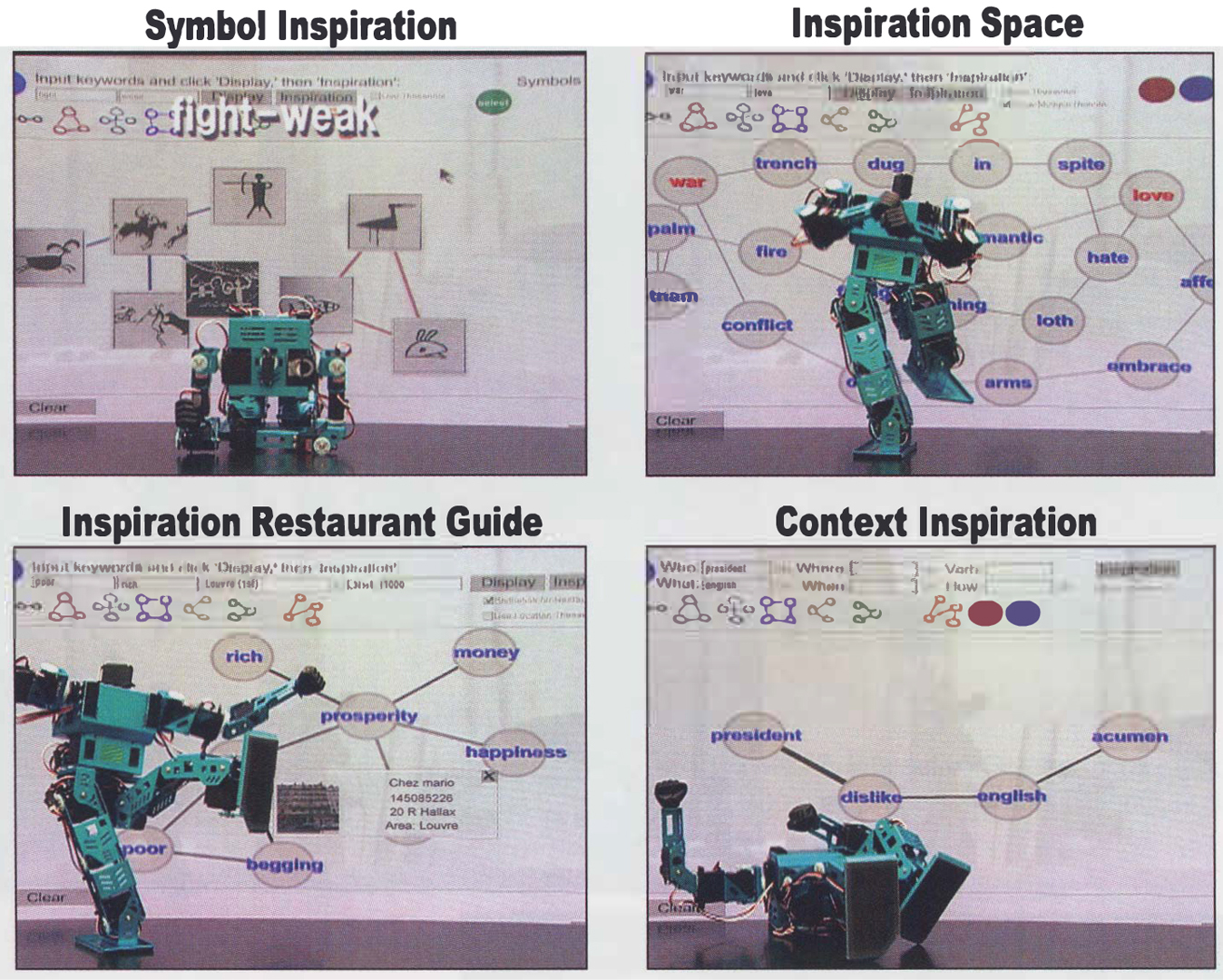

The goal of this project is to generate interactive literature and scripts for interactive cinema. Narrative is most vivid when emergent technologies are born. “Emergent” describes the moment when a product or idea, in the course of its advancement, breaks through a critical barrier and a heretofore-unimagined paradigm appears. The trick to finding this kind of “emergence” is “daring to pursue the marriage of completely different ideas.” As methods for computing inspiration, we used “thought-forms” (concatenation, balance, division, unification, crisscross), a “psychological thesaurus,” and “chaos search,” which uses a chaos engine to add a swaying element to the word relationships. We built the following software to generate inspiration context:

1. Inspiration Space: This system discovers hidden connections between words. It treats two words as connected if they appear in the same thought-form or form a stimulus-response pair in the Edinburgh Associative Thesaurus. It then finds several routes between two words by tracing a large set of possible paths between them, each path traversing several of these two-word connections. If the chaos engine is in an appropriate state, a preference may be added so that longer paths are displayed, or so that the paths are forced to pass through a more distantly connected word. The user may further expand the connections of any word of interest.

2. Inspiration Restaurant Guide: A restaurant guide based on the inspiration system was built from France Telecom’s Yellow Pages database. Each restaurant category and location in Paris was entered into the system and connected to related words (pizzeria: Italian food, tomato; creperie: date, sweets; fast food: quick, cheap). Users select a location (or ask the system to select one) and input their preferences for restaurant atmosphere. A set of words then appears, and users can select any word on the screen. The system searches for a restaurant type closely related to that word and displays a nearby restaurant of that type.

3. Context Inspiration: Using data obtained from the open lexical database WordNet (Princeton University) as well as manual categorization, the words in the database were classified by their grammatical properties into six categories: who, what, where, when, how, and verb. Users seed the system with a few idea words. Based on these input words, the system then generates sentences ranging from two to five words in length (not counting articles, conjunctions, etc.). Wherever there is a blank in a sentence, the system fills it with a word inspirationally linked to the words surrounding the blank.

4. Symbol Inspiration: Rather than attaching symbols to existing word associations, the system defines associations directly between symbols. These associations are based on the thought-forms explained above, in which connections are based on geometric forms. Users can seed the engine by entering words linked to images in the input text box or by clicking one of the colored thought-form buttons at the top of the screen.

5. Inspiration Blog: The blog system accepts complete sentences as input. Connections among all the keywords in a sentence are considered, and intersecting words are displayed on the screen. Connections between each entry and the preceding entry are also included, so that the context generated in each entry continues from the one before it.

6. Robot Agent Interface: The robot’s emotional expression and synthesized voice are generated automatically from the inspirational word context. When users interact with the inspiration system, it extracts keywords, converts them into behaviors via a language-emotion mapping, and sends those behaviors to the robot over a Bluetooth connection.
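
The statement does not say which chaotic system drives the chaos engine used in “chaos search.” The sketch below is a minimal illustration, assuming a logistic map x → r·x·(1 − x) in its chaotic regime (r = 3.99 is a hypothetical choice). It shows how a deterministic but hard-to-predict value could add a swaying element when one associated word is chosen among several. `ChaosEngine` and `sway_pick` are illustrative names, not the artists’ code.

```python
class ChaosEngine:
    """Hypothetical chaos engine: iterates the logistic map x -> r * x * (1 - x).

    Assumption: the original system's chaotic model is undocumented; the
    logistic map with r close to 4 is only a stand-in.
    """

    def __init__(self, x0: float = 0.123, r: float = 3.99) -> None:
        if not 0.0 < x0 < 1.0:
            raise ValueError("x0 must lie strictly between 0 and 1")
        if not 0.0 < r < 4.0:
            raise ValueError("r must lie strictly between 0 and 4 to keep x in (0, 1)")
        self.x = x0
        self.r = r

    def step(self) -> float:
        """Advance one iteration and return the new state, which stays in (0, 1)."""
        self.x = self.r * self.x * (1.0 - self.x)
        return self.x


def sway_pick(engine: ChaosEngine, candidates: list[str]) -> str:
    """Choose one associated word, letting the current chaotic state pick the index."""
    if not candidates:
        raise ValueError("candidates must not be empty")
    value = engine.step()
    index = min(int(value * len(candidates)), len(candidates) - 1)
    return candidates[index]


if __name__ == "__main__":
    engine = ChaosEngine()
    associations = ["italian food", "tomato", "sweets", "quick", "cheap"]
    print([sway_pick(engine, associations) for _ in range(8)])
```

Two engines seeded with the same `x0` replay the same sequence of choices, while a tiny change in `x0` soon produces a different one. That sensitivity is what would make such choices feel spontaneous yet reproducible.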
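
The Inspiration Space component (item 1) can be read as a word graph searched for multi-step paths. The sketch below assumes an undirected graph, a depth-first enumeration of simple paths up to a hypothetical `max_len`, and a hypothetical `threshold` on a chaos value above which longer paths are ranked first. The thought-form groups and stimulus-response pairs are toy data, not entries from the Edinburgh Associative Thesaurus.

```python
from collections import defaultdict


def build_graph(thought_forms: dict[str, list[str]],
                eat_pairs: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Link two words if they share a thought-form group or form a stimulus-response pair."""
    graph: dict[str, set[str]] = defaultdict(set)
    for group in thought_forms.values():
        for a in group:
            for b in group:
                if a != b:
                    graph[a].add(b)
    for stimulus, response in eat_pairs:
        graph[stimulus].add(response)
        graph[response].add(stimulus)
    return dict(graph)


def find_paths(graph: dict[str, set[str]], start: str, goal: str,
               max_len: int = 5) -> list[list[str]]:
    """Enumerate simple paths (no repeated word) from start to goal, at most max_len words long."""
    paths = []
    stack = [[start]]
    while stack:
        path = stack.pop()
        last = path[-1]
        if last == goal:
            paths.append(path)
            continue
        if len(path) >= max_len:
            continue
        for nxt in sorted(graph.get(last, set())):
            if nxt not in path:
                stack.append(path + [nxt])
    return paths


def rank_paths(paths: list[list[str]], chaos_value: float,
               threshold: float = 0.8) -> list[list[str]]:
    """Shortest paths first, unless the chaos value exceeds the threshold (then longest first)."""
    return sorted(paths, key=len, reverse=chaos_value > threshold)


if __name__ == "__main__":
    forms = {"unification": ["sea", "sky", "blue"], "division": ["night", "day"]}
    pairs = [("sky", "night"), ("blue", "sad"), ("day", "sun")]
    graph = build_graph(forms, pairs)
    paths = find_paths(graph, "sea", "sun", max_len=6)
    print(rank_paths(paths, chaos_value=0.95)[0])
    # -> ['sea', 'blue', 'sky', 'night', 'day', 'sun']
```

Enumerating every simple path grows quickly on a large thesaurus, so a real system would likely need a tighter length bound or sampling; the bound here is kept small for illustration.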
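
The Inspiration Restaurant Guide (item 2) combines a word-to-category lookup with a nearest-restaurant search. A minimal sketch, assuming each restaurant has planar coordinates and that “closely related” means the category whose related-word list contains the selected word. The category words are the examples given in the statement; the restaurant names and coordinates are hypothetical and are not France Telecom data.

```python
import math
from typing import NamedTuple, Optional


class Restaurant(NamedTuple):
    name: str
    category: str
    x: float  # hypothetical planar coordinates, e.g. km from a reference point
    y: float


# Related words taken from the examples in the statement.
CATEGORY_WORDS = {
    "pizzeria": {"italian food", "tomato"},
    "creperie": {"date", "sweets"},
    "fast food": {"quick", "cheap"},
}


def category_for(word: str) -> Optional[str]:
    """Return the first restaurant category whose related words include the selected word."""
    for category, words in CATEGORY_WORDS.items():
        if word in words:
            return category
    return None


def nearest_restaurant(word: str, user_xy: tuple[float, float],
                       restaurants: list[Restaurant]) -> Optional[Restaurant]:
    """Find the closest restaurant of the category related to the selected word."""
    category = category_for(word)
    if category is None:
        return None
    matches = [r for r in restaurants if r.category == category]
    if not matches:
        return None
    return min(matches, key=lambda r: math.dist(user_xy, (r.x, r.y)))
```

For example, selecting “sweets” maps to `creperie`, and the function returns whichever creperie in the list lies closest to the user’s chosen location.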
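
The Context Inspiration component (item 3) fills blank slots in a short sentence template. The sketch assumes a hand-made `lexicon` mapping categories to candidate words and a toy `links` table standing in for the inspirational word links. The scoring rule (count the adjacent words linked to a candidate; the first candidate wins ties) is an assumption, not the published method.

```python
CATEGORIES = ("who", "what", "where", "when", "how", "verb")


def link_score(candidate: str, neighbours: list[str],
               links: dict[str, set[str]]) -> int:
    """Count neighbouring words linked to the candidate in either direction."""
    return sum(
        (candidate in links.get(n, set())) or (n in links.get(candidate, set()))
        for n in neighbours
    )


def fill_sentence(template: list[tuple[str, "str | None"]],
                  lexicon: dict[str, list[str]],
                  links: dict[str, set[str]]) -> list[str]:
    """Fill blank slots (word None) with the best-linked word of the slot's category.

    Slots are filled left to right, so a freshly chosen word counts as a
    neighbour of the next blank.
    """
    if not 2 <= len(template) <= 5:
        raise ValueError("a sentence has two to five content words")
    words = [word for _, word in template]
    for i, (category, _) in enumerate(template):
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category}")
        if words[i] is not None:
            continue
        candidates = lexicon.get(category, [])
        if not candidates:
            raise ValueError(f"no candidate words for category: {category}")
        left = words[i - 1] if i > 0 else None
        right = words[i + 1] if i + 1 < len(words) else None
        neighbours = [w for w in (left, right) if w is not None]
        words[i] = max(candidates, key=lambda c: link_score(c, neighbours, links))
    return words
```

With seed word “robot” in the `who` slot and toy links robot → dreams → ocean, the template `[who, verb, where]` becomes `["robot", "dreams", "ocean"]`.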
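
The Robot Agent Interface (item 6) turns keywords into robot behaviors through a language-emotion mapping. The sketch below is a simplified illustration: the emotion lexicon, the behavior names, and the newline-terminated `emotion:behaviour` payload are all hypothetical, and the Bluetooth transport itself is omitted. The dominant emotion is simply the most frequent one among the matched keywords.

```python
from collections import Counter

# Hypothetical language-emotion lexicon; the real mapping is not published.
EMOTION_LEXICON = {
    "sunny": "joy", "picnic": "joy", "joy": "joy",
    "storm": "fear", "dark": "sadness", "alone": "sadness",
}

# Hypothetical behaviour names; not an actual robot API.
BEHAVIOURS = {
    "joy": "raise_arms",
    "fear": "step_back",
    "sadness": "lower_head",
    "neutral": "idle",
}


def extract_keywords(text: str) -> list[str]:
    """Keep the tokens that appear in the emotion lexicon."""
    tokens = (t.strip(".,;:!?\"'").lower() for t in text.split())
    return [t for t in tokens if t in EMOTION_LEXICON]


def dominant_emotion(keywords: list[str]) -> str:
    """Most frequent emotion among the keywords, or 'neutral' if none match."""
    counts = Counter(EMOTION_LEXICON[k] for k in keywords)
    return counts.most_common(1)[0][0] if counts else "neutral"


def behaviour_packet(text: str) -> bytes:
    """Build the bytes that would be written to the robot's Bluetooth link (transport omitted)."""
    emotion = dominant_emotion(extract_keywords(text))
    return f"{emotion}:{BEHAVIOURS[emotion]}\n".encode("utf-8")


if __name__ == "__main__":
    print(behaviour_packet("A dark night, alone."))  # -> b'sadness:lower_head\n'
```

Keeping the mapping as plain tables separates the language side from the robot side, so the same keyword pipeline could drive a different robot by swapping only `BEHAVIOURS`.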