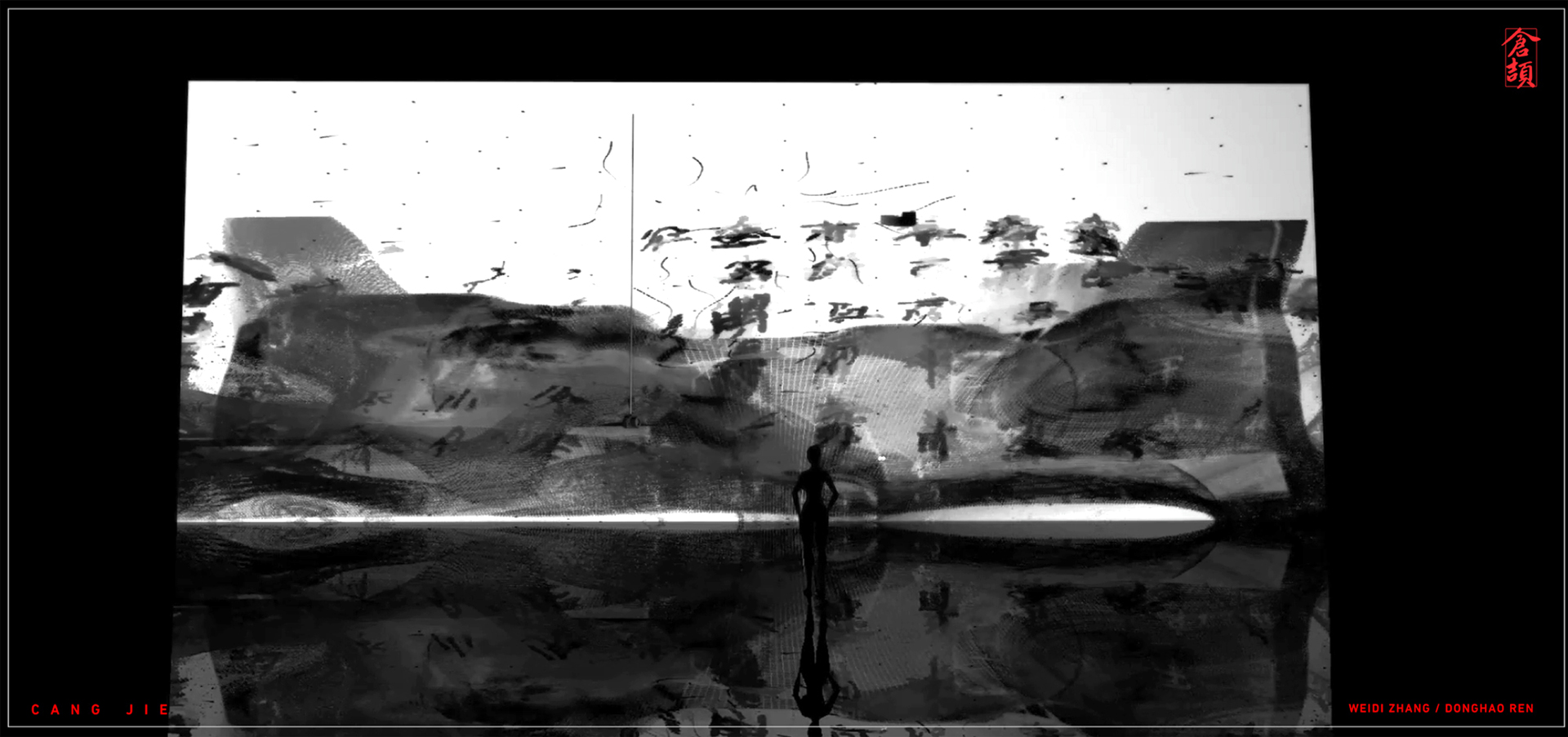

Weidi Zhang, Donghao Ren: Cangjie

Artist(s):

Title:

- Cangjie

Exhibition:

Category:

Artist Statement:

Summary

Cangjie provides a data-driven interactive spatial visualization in semantic human-machine reality. The visualization is generated by an intelligent system in real time by perceiving the real world through a camera (located in the exhibition space).

Abstract

Humans and machines are in constant conversation. Humans start the dialogue by using programming languages that are compiled to binary digits that machines can interpret. However, intelligent machines today are not only observers of the world; they also make their own decisions.

If A.I. imitates human beings by creating a symbolic system to communicate its own understanding of the universe, and starts to actively interact with us, how will this recontextualize and redefine our coexistence in this intertwined reality? To what degree can the machine enchant us with curiosity and enhance our expectations of a semantic meaning-making process?

Cangjie provides a data-driven interactive spatial visualization in semantic human-machine reality. The visualization is generated by an intelligent system in real time by perceiving the real world through a camera (located in the exhibition space). Inspired by Cangjie, a legendary ancient Chinese historian (c. 2650 BCE) who invented Chinese characters based on the characteristics of everything on the earth, we trained a neural network, which we named Cangjie, to learn the constructions and principles of all the Chinese characters. It transforms what it perceives into a collage of unique symbols made of Chinese strokes. The symbols produced through the lens of Cangjie, tangled with the imagery captured by the camera, are visualized algorithmically as abstracted, pixelated semiotics, continuously evolving and composing an ever-changing poetic virtual reality.

Cangjie is not only a conceptual response to the tension and fragility in the coexistence of humans and machines but also an artistic imagined expression of a future language that reflects on ancient truths in this artificial intelligence era. The interactivity of this intelligent visualization prioritizes ambiguity and tension that exist between the actual and the virtual, machinic vision and human perception, and past and future.

Technical Information:

The methodology of Cangjie consists of three aspects:

1. Intelligent System Design. To convert images to Chinese strokes, we use unsupervised learning techniques to model Chinese characters. The learned model is then used to create novel characters based on details in the images. We trained a neural network (named Cangjie) on vector stroke data of over 9000 Chinese characters, using the Bidirectional Generative Adversarial Network (BiGAN) architecture. After successful training, the discriminator and the encoder/generator reach a stable state resembling a Nash equilibrium. The network learns a low-dimensional latent representation of these images. Thus, when the live stream of the real world is processed by the system, the encoder network produces its latent representation. The generator network can then reconstruct the image and generate novel symbols from the given latent representation. The novel symbols are constructed from Chinese strokes, but they do not carry specific meanings.

2. Experimental Visualization Using Neural Network Generated Image Data. The image data is first manipulated with image processing techniques (image differencing and alpha compositing), filter design, and data transformation. We then use the OpenGL Shading Language (GLSL) to relocate pixels from the real-world texture to positions determined by the image generated by Cangjie. The RGBA channel data captured from the live stream is used to control the movements of the pixels. The goal is to create an effect like ink flow that continuously writes the new symbols Cangjie generates in real time from the live stream texture.

3. Spatial Visualization in Virtual Reality Space Using Image Data. The experimental visualization is used as data input to compose a virtual reality space. The data-driven world-building strategies consist mainly of two parts: (a) Algorithmic Virtual World Composition: multiple mathematical algorithms are implemented to create a world structure, including the Voronoi diagram (sparse). (b) Texture Development: data-driven abstract patterns and forms are visualized to compose the world by dynamically using arrays of lines, points, curves, photogrammetry point clouds, image data-driven agency, and other image processing techniques.
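The statement names the Voronoi diagram as one of the algorithms behind the world structure but does not describe how it is applied. As a rough illustration only, the sketch below partitions a 2D grid into Voronoi cells by assigning every grid point to its nearest seed. The function name `voronoi_labels`, the seed coordinates, and the restriction to two dimensions are assumptions made for this example and are not taken from the artists' implementation.

```python
import numpy as np


def voronoi_labels(seeds, width, height):
    """Label each grid cell with the index of its nearest seed.

    seeds: (N, 2) array of (x, y) seed positions (hypothetical input).
    Returns an (height, width) integer array of seed indices.
    This is a brute-force nearest-seed partition, which is enough
    to show the idea for a sparse set of seeds.
    """
    seeds = np.asarray(seeds, dtype=float)
    ys, xs = np.mgrid[0:height, 0:width]
    grid = np.stack([xs, ys], axis=-1).astype(float)  # shape (H, W, 2)
    # Squared distance from every grid cell to every seed: shape (H, W, N)
    d2 = ((grid[:, :, None, :] - seeds[None, None, :, :]) ** 2).sum(axis=-1)
    return d2.argmin(axis=-1)


# Two sparse seeds on a small grid; each cell joins its closest seed.
labels = voronoi_labels([[0.0, 0.0], [3.0, 3.0]], width=4, height=4)
```

In a VR scene the same nearest-seed rule would apply in three dimensions, with the resulting cells used as regions of the world.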

The described approaches result in two interactive projections: 1. An experimental visualization of Cangjie writing novel symbols based on its interpretation of its surroundings. 2. A real-time VR projection of a virtual world constructed with the novel symbols Cangjie creates.
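The image processing stage behind the first projection relies on image differencing and alpha compositing. The production pipeline runs in GLSL and its details are not published here, so the following is only a minimal NumPy sketch of the two standard operations. The function names, the float RGBA layout in the range 0 to 1, and the use of non-premultiplied alpha are assumptions for illustration.

```python
import numpy as np


def image_difference(prev_frame, curr_frame):
    """Absolute per-pixel difference between two uint8 frames.

    Widening to int16 first avoids uint8 wrap-around on subtraction.
    """
    diff = curr_frame.astype(np.int16) - prev_frame.astype(np.int16)
    return np.abs(diff).astype(np.uint8)


def alpha_composite(fg_rgba, bg_rgba):
    """Porter-Duff "over": place fg_rgba on top of bg_rgba.

    Both inputs are float arrays of shape (..., 4) with values in
    [0, 1] and non-premultiplied alpha (an assumption of this sketch).
    """
    fg_rgb, fg_a = fg_rgba[..., :3], fg_rgba[..., 3:4]
    bg_rgb, bg_a = bg_rgba[..., :3], bg_rgba[..., 3:4]
    out_a = fg_a + bg_a * (1.0 - fg_a)
    # Avoid dividing by zero where both layers are fully transparent.
    safe_a = np.where(out_a > 0, out_a, 1.0)
    out_rgb = (fg_rgb * fg_a + bg_rgb * bg_a * (1.0 - fg_a)) / safe_a
    return np.concatenate([out_rgb, out_a], axis=-1)
```

Differencing consecutive camera frames highlights where something moved, and compositing lets a generated symbol layer sit over the camera image with partial transparency.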

Process Information:

This VR project provides an immersive exploration of semantic human-machine reality, generated by an intelligent system in real time by perceiving the real world through a camera [located in the exhibition space]. Inspired by Cangjie, a legendary ancient Chinese historian (c. 2650 BCE) who invented Chinese characters based on the characteristics of everything on the earth, we trained a neural network that we call Cangjie to learn the constructions and principles of over 9000 Chinese characters. It perceives the surroundings and transforms them into a collage of unique symbols made of Chinese strokes. The symbols produced through the lens of Cangjie, tangled with the imagery captured by the camera, are visualized algorithmically as abstract pixelated semiotics, continuously evolving and composing an ever-changing poetic virtual reality.

The user interaction is realized in two ways. First, a camera is set in the center of the installation and observes the surroundings. Audience members in the installation are captured by the camera as a live stream, which is processed by Cangjie (the trained neural network) to generate the semiotic visualizations. Second, the live stream of the surroundings (including the audience) is used as textures in the VR space, and the data of this live stream determines the particle movements and the ink flow directions in the virtual space. Audience members can see themselves captured as textures in the VR space, and their movements alter the appearance of the virtual world.
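The statement does not specify how live stream data is mapped to particle motion. Purely as an illustration of the idea, the sketch below moves each particle according to the colour of the frame pixel beneath it. The mapping (red drives horizontal velocity, green drives vertical velocity, alpha scales the step) and the names `step_particles` and `speed` are hypothetical choices for this example, not the artists' method.

```python
import numpy as np


def step_particles(positions, frame_rgba, speed=1.0):
    """Advance particles one step using the colour under each particle.

    positions: (N, 2) float array of (x, y) pixel coordinates.
    frame_rgba: (H, W, 4) float array in [0, 1] from the live stream.
    Hypothetical mapping: a channel value of 0.5 means no movement on
    that axis; values above or below push the particle either way.
    """
    h, w = frame_rgba.shape[:2]
    xs = np.clip(positions[:, 0].astype(int), 0, w - 1)
    ys = np.clip(positions[:, 1].astype(int), 0, h - 1)
    rgba = frame_rgba[ys, xs]  # colour sampled under each particle
    velocity = (rgba[:, :2] - 0.5) * 2.0 * speed * rgba[:, 3:4]
    new_positions = positions + velocity
    # Wrap around the frame edges so particles stay inside the image.
    new_positions[:, 0] %= w
    new_positions[:, 1] %= h
    return new_positions
```

Because the sampled colours change whenever visitors move in front of the camera, a rule of this kind would let their movements redirect the flow.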

Cangjie is not only a conceptual response to the tension and fragility in the coexistence of humans and machines but also an artistic imagined expression of a future language that reflects on ancient truths, a way to evoke enchantment in this artificial intelligence era. The interactivity of this intelligent visualization prioritizes ambiguity and tension that exist between the actual and the virtual, machinic vision and human perception, and past and future. By providing a visualization of the novel symbols generated by the machine, the human-machine interaction sustains users’ curiosity and blurs the boundary between precise data-driven design and pure artistic experience.

Other Information:

Inspiration Behind the Project

Our inspiration comes from Cangjie, a legendary ancient Chinese historian (c. 2650 BCE) who invented Chinese characters based on the characteristics of everything on the earth. We thought it would be intriguing to train an A.I. system to observe the real world and reconstruct it using unique symbols, just as Cangjie did. Humans and machines are in constant conversation. Humans start the dialogue by using programming languages that are compiled to binary digits that machines can interpret. However, intelligent machines today are not only observers of the world; they also make their own decisions. If A.I. imitates human beings by creating a symbolic system to communicate its own understanding of the universe, and starts to actively interact with us, how will this recontextualize and redefine our coexistence in this intertwined reality?