Joreg: Imago

Artist(s):

Collaborators:

Title:

- Imago

Exhibition:

Creation Year:

- 2007

Category:

Artist Statement:

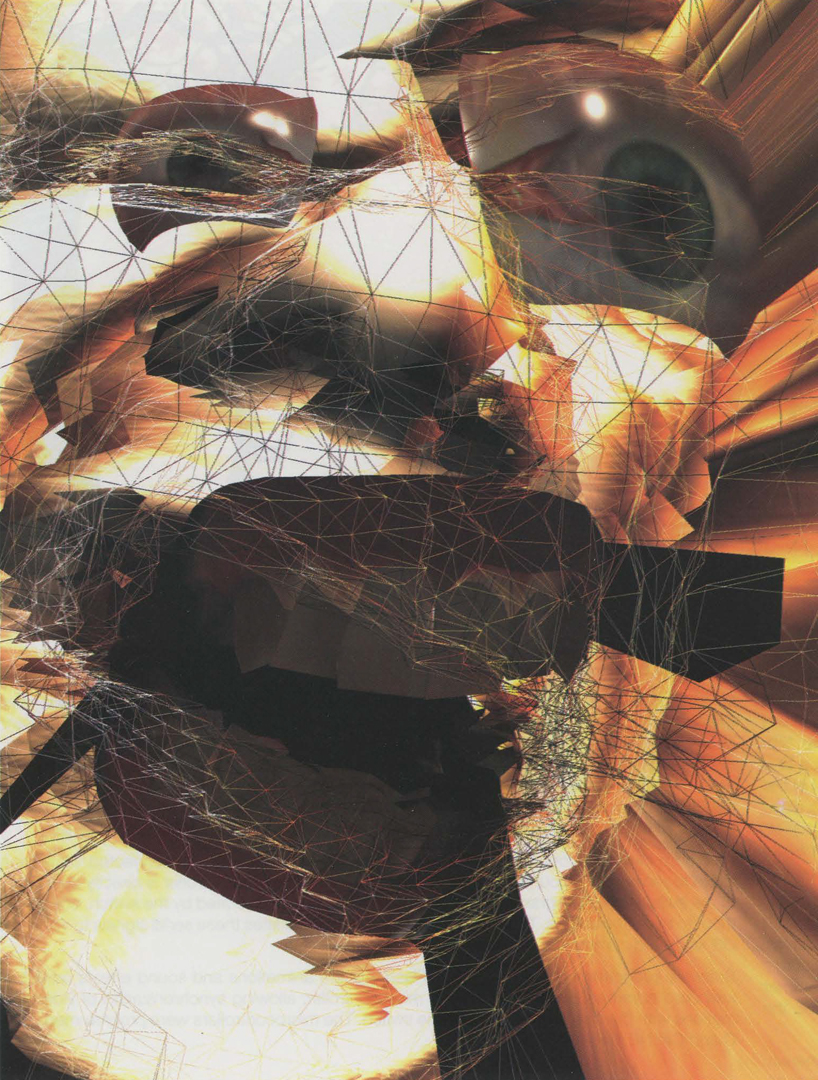

Imago is a piece for a solo vocalist about multimedial representation and reproduction. The singer is reduced to face and voice and stylized as a triptych: in the middle, the real head of the singer is flanked left and right by three-dimensional representations of him. The singer relinquishes himself to the point of self-abnegation. Digital machines dismantle, transform, and duplicate his voice and ultimately merge it in its frequency domain with other sounds. The recursive relationship between voice and machine leads to successive dissolution of the difference between analog and digital sound sources for the listener and the singer. Although the singer remains outwardly the creator and authority in charge of the acoustic events, the purported expansion and enrichment of the vocalized expression ultimately turns into manipulation and estrangement. This process of representation, reproduction, and estrangement is continued on the visual level. The digital portraits of the singer begin to develop lives of their own. They cease functioning in accordance with the norms of self-representation. Under the influence of the music, they undergo changes and deformation, raising the suspicion that the deformations represent the singer’s actual situation, which is to say his loss of control.

Technical Information:

The task was to duplicate the head of a live performer and animate its facial gestures in real time. We wanted to be as realistic as possible but also to find ways to extend and finally abstract the expressions of the real protagonist. The animation is controlled by a joystick and by various parameters extracted from a live soundscape. The sound, in turn, is generated from prepared beats that are transformed by the singer’s voice and spatialized to a multichannel audio setup surrounding the audience. Audio programming is done using software such as Kyma and Max/MSP. For the animations, the performer’s head was digitized using a 3D scanner, which produced a high-resolution but partly faulty mesh. Rather than clean this mesh, we found it easier to build a new mesh from scratch and align its vertices to the shape of the scanned mesh. For the texture, photographs were taken around the head and stitched together. Normal maps and ambient occlusion maps were generated and used in a pixel shader during the lighting calculation. Animation is done using 16 morph targets of the head in different shapes. For maximum flexibility during performance, all morph targets were put into one large vertex buffer. A custom vertex shader was then used to animate between any of the shapes in real time. Programming is done with our custom software called vvvv (vvvv.org), a multipurpose toolkit and graphical programming language for real-time video synthesis.
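The morph-target approach described above can be illustrated with a small CPU-side sketch in Python. It is not the vvvv patch or the original shader: the function names (`pack_targets`, `blend_vertex`, `blend_mesh`), the buffer layout (targets stored one after another), and the use of absolute positions with weights that sum to one are all assumptions made for illustration. The actual piece may have used per-target offsets or a different layout.

```python
# Illustrative sketch only. Layout and names are assumptions, not the
# original vvvv implementation. Each morph target is a list of (x, y, z)
# vertex positions, and all targets share the same vertex order.


def pack_targets(targets):
    """Concatenate all morph targets into one flat buffer.

    Returns the buffer and the number of vertices per target, mirroring
    the idea of a single large vertex buffer holding every shape.
    """
    vertex_count = len(targets[0])
    buffer = []
    for shape in targets:
        if len(shape) != vertex_count:
            raise ValueError("all morph targets must share one topology")
        buffer.extend(shape)
    return buffer, vertex_count


def blend_vertex(buffer, vertex_count, index, weights):
    """Weighted sum of one vertex position across all targets.

    This is the per-vertex work a vertex shader would do: look up the
    same vertex in each target and mix the positions by weight.
    """
    x = y = z = 0.0
    for target, weight in enumerate(weights):
        if weight == 0.0:
            continue  # skip targets that do not contribute
        px, py, pz = buffer[target * vertex_count + index]
        x += weight * px
        y += weight * py
        z += weight * pz
    return (x, y, z)


def blend_mesh(buffer, vertex_count, weights):
    """Blend every vertex; the GPU would run this in parallel."""
    return [
        blend_vertex(buffer, vertex_count, i, weights)
        for i in range(vertex_count)
    ]


# Two tiny hypothetical targets with two vertices each.
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
raised = [(0.0, 1.0, 0.0), (1.0, 2.0, 0.0)]
buffer, vertex_count = pack_targets([neutral, raised])
halfway = blend_mesh(buffer, vertex_count, [0.5, 0.5])
```

In a setup like the one described, the weight list would have 16 entries and would change every frame, driven for example by joystick axes or by values extracted from the audio. Keeping every target in one buffer means any combination of shapes can be mixed without re-uploading geometry.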